Introduction

Generative AI in healthcare is ushering in a new era, one in which clinicians could start their day not by combing through years of scattered medical records but by reviewing a neatly summarized, chronologically ordered patient history generated in seconds. This technology promises to reduce clinician burnout, speed up medical research, and deliver personalized care at scale.

Yet, despite the excitement, the real-world impact of generative AI in healthcare remains a nuanced mix of immense potential and serious challenges. This article aims to cut through the hype and offer a clear, evidence-based look at what generative AI can realistically achieve in clinical settings—and where it still falls short. Whether you’re a doctor, tech developer, or healthcare administrator, you’ll find insights into how this technology is shaping the future of medicine.


For Clinicians:

Understand how generative AI in healthcare can realistically reduce administrative burden and augment diagnostic processes—without threatening your indispensable role. We’ll examine how this technology can become a co-pilot, not a replacement.

For Technologists:

Gain insight into the specific clinical needs, data challenges (e.g., EHR integration), and technical models like GANs and LLMs that are powering generative AI in healthcare. We’ll explore the demands of deploying AI safely and effectively in a high-stakes, regulated environment.

For Administrators:

Evaluate the ROI, implementation hurdles, and critical ethical and regulatory frameworks surrounding generative AI in healthcare. We’ll provide a strategic roadmap for adopting this technology responsibly across your organization.

The Core Technology: What is Generative AI in Healthcare?

To evaluate its impact, we must first establish a common understanding of what Generative AI is. At its core, it represents a fundamental shift from analyzing data to creating it.

What is Generative AI?

Generative AI in healthcare refers to a class of artificial intelligence capable of creating new, original content—such as clinical text, diagnostic images, or synthetic patient data—rather than just analyzing or classifying existing information. This is achieved through models that learn underlying patterns from vast medical datasets. According to the U.S. Government Accountability Office, these AI systems are trained on “millions to trillions of data points,” including anonymized clinical notes, medical literature, and imaging data (GAO, 2024).

Key Models Powering Medical Applications

Two primary types of models are driving the current wave of innovation in healthcare:

- Large Language Models (LLMs): These are the engines behind tools like ChatGPT. In healthcare, they excel at understanding, summarizing, and generating human-like text. Applications include drafting clinical notes from doctor-patient conversations, summarizing complex research papers, or generating discharge summaries, significantly reducing the administrative burden on clinicians (SyS Creations, 2025).

- Generative Adversarial Networks (GANs) & Variational Autoencoders (VAEs): These models are pivotal in medical imaging. GANs consist of two competing neural networks, a generator that creates images and a discriminator that tries to tell them apart from real ones, which together learn to produce highly realistic synthetic images. VAEs take a different route: they compress images into a compact latent representation and learn to decode new, plausible images from it. These capabilities are crucial for augmenting limited datasets for rare diseases, enhancing image resolution, reconstructing 3D models from 2D scans, and identifying subtle anomalies in neuroimaging to detect complex disease patterns such as those in Alzheimer’s disease (PMC, 2023).
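
The generator-versus-discriminator competition described above has a compact formal statement. In the standard GAN formulation, the two networks play a minimax game; it is shown here as general background, not as the specific objective used in any of the medical studies cited in this article:

$$
\min_{G}\max_{D}\; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
$$

Here $x$ is a real training image, $z$ is random noise, $G(z)$ is a synthetic image, and $D(\cdot)$ is the discriminator’s estimated probability that its input is real. The discriminator maximizes the expression by scoring real images near 1 and synthetic ones near 0, while the generator minimizes it by producing images that push $D(G(z))$ toward 1.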

Generative AI vs. Traditional Predictive AI

The distinction between generative and traditional AI is crucial for understanding its unique value proposition.

| Attribute | Traditional AI (Predictive/Analytical) | Generative AI (Creative/Synthetic) |
|---|---|---|
| Primary Function | Classification, Prediction, Detection | Creation, Synthesis, Generation |
| Example Question | “Does this X-ray show a tumor? (Yes/No)” | “Generate a draft radiology report describing the findings in this X-ray.” |
| Core Task | Analyzes existing data to find patterns and make predictions. | Uses learned patterns to create entirely new, contextually relevant content. |
| Clinical Use Case | Predicting patient readmission risk based on historical data. | Generating a personalized patient education handout about a specific condition. |
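
For technologists, the contrast in the table can be made concrete with a deliberately tiny Python toy. The predictive function maps an input to one label from a fixed set, while the generative part learns which word tends to follow which in a few made-up report phrases (a bigram model, a crude stand-in for an LLM) and then samples new sentences one word at a time. The phrases, function names, and the bigram approach are illustrative assumptions only; real LLMs use large neural networks trained on vastly more text, although the idea of sampling the next word from learned patterns is similar.

```python
import random
from collections import defaultdict

# Made-up report phrases for illustration only; not real clinical data.
CORPUS = [
    "no acute findings in the chest",
    "no acute fracture is seen",
    "mild opacity in the left lower lobe",
]


def predict_label(has_opacity: bool) -> str:
    """Predictive style: map an input to one label from a fixed set."""
    return "abnormal" if has_opacity else "normal"


def train_bigrams(sentences: list[str]) -> dict[str, list[str]]:
    """Generative style, step 1: record which word follows which."""
    followers: dict[str, list[str]] = defaultdict(list)
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]  # add start/end markers
        for prev, nxt in zip(words, words[1:]):
            followers[prev].append(nxt)
    return dict(followers)


def generate(followers: dict[str, list[str]], rng: random.Random, max_words: int = 12) -> str:
    """Generative style, step 2: sample a new sentence one word at a time."""
    word, output = "<s>", []
    for _ in range(max_words):
        word = rng.choice(followers[word])
        if word == "</s>":  # end-of-sentence marker
            break
        output.append(word)
    return " ".join(output)


if __name__ == "__main__":
    model = train_bigrams(CORPUS)
    rng = random.Random(7)
    print(predict_label(True))  # always one of two fixed labels
    for _ in range(3):
        print(generate(model, rng))  # may recombine fragments into sentences not in CORPUS
```

Because the generator samples, it can stitch fragments into fluent sentences that no input supported (for example, "mild opacity in the chest"), a miniature version of the hallucination risk listed in the pros-and-cons table.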

Clinical Reality: Real-World Use Cases and Applications

Moving from theory to practice, Generative AI is already being deployed to solve tangible problems across the healthcare ecosystem. Here are four key areas where it is making a measurable impact.

Streamlining Clinical Documentation and Reducing Burnout

The Problem: Clinicians are drowning in administrative work. Studies show that for every hour spent with patients, physicians can spend over an hour on EHR tasks, a major contributor to professional burnout (SyS Creations, 2025).

The Generative AI Solution: By leveraging generative AI in healthcare, systems can automatically transcribe and structure clinical conversations, draft documentation, and generate referral letters or discharge summaries. This reduces the clerical burden, allowing doctors to focus more on patient care and less on paperwork.

Evidence & Examples: Tools like AI-Rad Companion are being used to auto-generate descriptive findings from radiology scans, saving radiologists valuable time (PMC, 2024). Similarly, major LLMs like GPT-4 are being tested and deployed for a range of medical documentation tasks, demonstrating the potential to significantly improve administrative efficiency.

Augmenting Diagnostics and Treatment Planning

The Problem: Diagnosing complex conditions requires synthesizing vast amounts of disparate data from EHRs, imaging, and genomics. This process is time-consuming and susceptible to human cognitive biases.

The Generative AI Solution: Generative AI in healthcare can analyze a patient’s complete dataset, including medical history, genetics, and lifestyle factors, to detect subtle patterns and suggest personalized treatment options (InterSystems). In fields like neuroimaging, AI-driven models such as GANs can highlight anomalies often missed by traditional diagnostic tools, supporting more precise and timely clinical decisions (PMC, 2023).

Evidence & Examples: Research has demonstrated the use of GANs to improve the quality of CT image reconstruction from incomplete data, reducing artifacts and leading to clearer images for diagnosis (PMC, 2024). This shows a direct application in enhancing the raw materials clinicians use for decision-making.

Accelerating Drug Discovery and Clinical Trials

The Problem: The traditional drug development pipeline is notoriously slow and expensive, with costs averaging over $1 billion per new drug and timelines often exceeding a decade.

The Generative AI Solution: By applying generative AI in healthcare, researchers can design novel molecules, predict drug interactions, and simulate early-stage clinical trials using synthetic patient data. This approach has the potential to shorten development cycles, reduce dependence on physical trial participants, and improve forecasts of drug safety and efficacy.

Evidence & Examples: Companies like Atomwise use AI to predict molecule behavior, accelerating the identification of potential drug candidates for diseases like Ebola and multiple sclerosis (John Snow Labs, 2025). By simulating how combinations of drugs will interact, these models help researchers design more effective therapies with fewer adverse effects, a task that is immensely complex for human researchers alone (LeewayHertz).

Enhancing Patient Engagement and Education

The Problem: Many patients struggle to understand complex health information or follow through with treatment plans, often due to limited access and unclear communication.

The Generative AI Solution: Generative AI in healthcare powers intelligent virtual assistants and personalized health content. These tools provide 24/7 support, answer medical questions in plain language, send medication reminders, and generate tailored educational resources based on a patient’s condition and health literacy—ultimately improving engagement and outcomes.

Evidence & Examples: Apps like Ada guide users by analyzing their symptoms and suggesting appropriate next steps, acting as a “mini doctor in your pocket” (SyS Creations, 2025). These tools can also create tailored nutrition and exercise plans based on an individual’s health status and goals, which has been shown to improve adherence to healthy lifestyle recommendations (InterSystems).

The Balanced View: A Critical Look at Pros and Cons

Despite its transformative potential, the adoption of Generative AI in healthcare is not without significant risks and challenges. A balanced perspective is essential for responsible implementation.

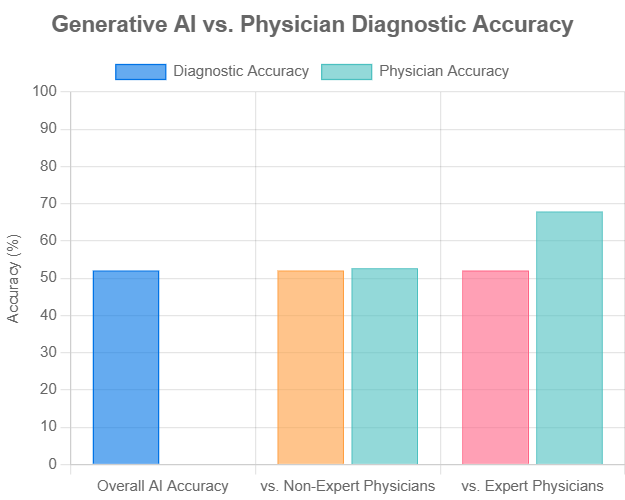

Figure 1: A meta-analysis of 83 studies shows Generative AI’s diagnostic accuracy is comparable to non-expert physicians but still lags behind expert physicians. Data sourced from npj Digital Medicine (2025).

| Area of Impact | The Promise (Potential Benefits) | The Reality Check (Challenges & Risks) |
|---|---|---|
| Clinical Efficiency | – Automates repetitive tasks (documentation, scheduling), freeing up clinician time for direct patient care. – Speeds up data analysis for faster decision-making. | – AI Hallucinations: Models can generate factually incorrect or fabricated information, requiring rigorous human-in-the-loop verification (PMC, 2024). – Integration Complexity: Difficult and costly to integrate with legacy EHR systems. |
| Diagnostic Accuracy | – Analyzes complex datasets (genomics, imaging) to detect subtle patterns missed by the human eye. – Provides a “second opinion” to reduce diagnostic errors. | – Algorithmic Bias: Models trained on non-diverse data can perpetuate and amplify health disparities (CDC, 2024). – The “Black Box” Problem: The reasoning behind an AI’s suggestion can be opaque, making it hard for clinicians to trust or verify. |
| Personalized Medicine | – Generates tailored treatment plans based on an individual’s unique genetic, lifestyle, and medical data. – Creates personalized patient education materials. | – Data Privacy & Security: Requires access to vast amounts of sensitive patient data, posing significant HIPAA/GDPR compliance and security risks (PMC, 2024). – Inaccurate Personalization: A flawed model could suggest harmful or ineffective treatments. |
| Research & Development | – Drastically accelerates drug discovery and simulates clinical trials with synthetic data. – Augments rare disease research by generating data where little exists. | – Validation Gap: Most tools remain largely untested in real-world clinical settings. A meta-analysis showed AI is not yet superior to expert physicians (Nature, 2025). – Regulatory Hurdles: The path to FDA approval for adaptive AI systems is complex and not yet fully defined (PMC, 2025). |

Navigating the Future: Ethical and Regulatory Imperatives

Beyond technical hurdles, the most significant barriers to the widespread, safe adoption of Generative AI are ethical and regulatory. Addressing these issues head-on is non-negotiable.

The Ethical Minefield: Bias, Privacy, and Accountability

- Bias and Fairness: Algorithmic bias is a critical threat to health equity. If an AI model is trained predominantly on data from one demographic, its performance may be less accurate for underrepresented populations, leading to misdiagnoses and perpetuating systemic disparities. Mitigating this requires a conscious effort to build diverse and representative training datasets and employ fairness-aware algorithms (PMC, 2024).

- Privacy and Data Security: The power of these models is derived from vast amounts of sensitive patient data. This creates significant risks of data breaches and misuse. Strict adherence to privacy regulations like HIPAA and GDPR, coupled with robust security protocols like encryption and data anonymization, is paramount to maintaining patient trust and autonomy.

- Accountability: A crucial, unresolved question looms: If an AI system contributes to a medical error, who is liable? Is it the software developer, the hospital that deployed it, or the clinician who acted on its suggestion? Establishing clear governance frameworks and lines of responsibility is essential before these tools can be fully integrated into high-stakes clinical workflows.
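
One concrete way to check for the performance gap described under Bias and Fairness is to report a model’s accuracy separately for each demographic subgroup rather than as a single overall number. The sketch below assumes you already have model predictions and confirmed outcomes tagged with a subgroup; the records and group names are hypothetical, and accuracy is only one of several fairness metrics (per-group sensitivity, false-negative rate, and calibration are often more clinically relevant).

```python
from collections import defaultdict

# Hypothetical audit records: (subgroup, model_prediction, confirmed_diagnosis).
RECORDS = [
    ("group_a", True, True), ("group_a", False, False),
    ("group_a", True, True), ("group_a", False, False),
    ("group_b", True, False), ("group_b", False, True),
    ("group_b", True, True), ("group_b", False, False),
]


def accuracy_by_group(records):
    """Return {subgroup: fraction of predictions matching the confirmed outcome}."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {g: correct[g] / total[g] for g in total}


def max_gap(per_group):
    """Largest difference in accuracy between any two subgroups."""
    return max(per_group.values()) - min(per_group.values())


if __name__ == "__main__":
    per_group = accuracy_by_group(RECORDS)
    print(per_group)           # {'group_a': 1.0, 'group_b': 0.5}
    print(max_gap(per_group))  # 0.5
```

A gap like the 0.5 in this toy data would flag the model for investigation before deployment; in practice each subgroup also needs enough cases for the difference to be statistically meaningful.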

The Regulatory Landscape: Charting a Path to Approval

The Challenge for Regulators: Traditional medical device approval processes were designed for static hardware and software. They are ill-suited for adaptive AI models that continuously learn and evolve from new data. This creates a significant regulatory gap.

Current State: Agencies like the U.S. Food and Drug Administration (FDA) are actively developing new frameworks for AI/ML-based software. However, regulatory uncertainty remains a major challenge for developers and healthcare organizations, slowing down innovation and adoption (Acroplans, 2024).

The Need for Collaboration: A successful path forward requires deep collaboration. As highlighted by a Duke Clinical Research Institute think tank, experts from academia, industry, and government must work together to establish rigorous standards for data management, clinical validation, post-market surveillance, and transparency to ensure the safety and effectiveness of AI applications (PMC, 2025).

Frequently Asked Questions (FAQ)

Q1: Will Generative AI replace doctors or radiologists?

A: No. The prevailing view among researchers and clinicians is that Generative AI will function as a powerful co-pilot or augmentation tool, not a replacement. It is poised to handle data-intensive, repetitive tasks like drafting reports and summarizing histories. This allows clinicians to offload administrative burdens and focus on complex decision-making, empathy, procedural skills, and holistic care, areas where human expertise and connection are irreplaceable.

Q2: How can we trust the output of a “black box” AI?

A: Trust is not a given; it must be earned. It is built through a multi-pronged strategy:

1) Rigorous Validation: Continuously testing models against gold-standard clinical data and comparing their performance to expert physicians, as seen in recent meta-analyses.

2) Explainable AI (XAI): Focusing research and development on creating models that can articulate the “why” behind their recommendations, moving from opaque outputs to transparent reasoning.

3) Human-in-the-Loop: Ensuring a qualified clinician always makes the final decision, using the AI as an input or a “second reader,” not as an infallible oracle.
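
The human-in-the-loop principle can be enforced in software as well as in policy. A minimal sketch, assuming a hypothetical workflow in which every AI-generated draft starts out unapproved and cannot be filed to the record until a named clinician signs off; the class, field, and function names are illustrative and not taken from any EHR vendor’s API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AIDraft:
    """Hypothetical AI-generated draft (e.g. a note or report) awaiting clinician review."""
    text: str
    reviewer: Optional[str] = None
    approved: bool = False

    def sign_off(self, reviewer: str, edited_text: Optional[str] = None) -> None:
        """Record the reviewing clinician, keeping any edits they made."""
        if not reviewer.strip():
            raise ValueError("a named reviewer is required")
        if edited_text is not None:
            self.text = edited_text
        self.reviewer = reviewer
        self.approved = True


def file_to_record(draft: AIDraft) -> str:
    """Refuse to file anything a clinician has not approved."""
    if not draft.approved:
        raise PermissionError("draft has not been reviewed by a clinician")
    return f"{draft.text}\n-- reviewed and approved by {draft.reviewer}"


if __name__ == "__main__":
    draft = AIDraft(text="Draft: no acute findings.")
    try:
        file_to_record(draft)
    except PermissionError as err:
        print("blocked:", err)
    draft.sign_off("Dr. Example", edited_text="No acute cardiopulmonary findings.")
    print(file_to_record(draft))
```

The design choice is that approval is a precondition checked at the point of filing, so skipping review fails loudly instead of silently.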

Q3: What is the first practical step for my hospital/clinic to adopt Generative AI?

A: Start small and strategically. A phased approach minimizes risk and maximizes learning.

1) Identify a High-Pain, Low-Risk Problem: Don’t begin with high-stakes, autonomous diagnostics. Instead, target a well-defined administrative bottleneck, such as drafting initial clinical notes or generating patient education materials.

2) Pilot a Proven Solution: Partner with a vendor that has a HIPAA-compliant, well-validated tool and a clear understanding of clinical workflows.

3) Measure and Scale: Run a pilot in a single department. Measure clear KPIs like time saved, clinician satisfaction, and documentation accuracy. Use these concrete results to build a business case and inform a broader, evidence-based rollout strategy.
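
For the Measure and Scale step, the headline KPIs are simple arithmetic once the pilot collects matching before-and-after measurements. The sketch below assumes per-note documentation times in minutes and a per-draft flag recording whether the clinician accepted the AI draft without major correction (a simple proxy for documentation accuracy); all numbers are hypothetical placeholders, not results from any real pilot.

```python
from statistics import mean

# Hypothetical pilot measurements (minutes spent documenting each note).
BASELINE_MINUTES = [10, 14, 12]  # before the pilot
PILOT_MINUTES = [8, 9, 7]        # with AI-drafted notes
# Hypothetical review outcome per AI draft: True = accepted without major correction.
ACCEPTED_WITHOUT_MAJOR_EDIT = [True, True, False, True]


def pilot_kpis(baseline, pilot, accepted):
    """Compute time saved, relative reduction, and draft acceptance rate."""
    before, after = mean(baseline), mean(pilot)
    return {
        "minutes_saved_per_note": before - after,
        "percent_time_reduction": 100 * (before - after) / before,
        "draft_acceptance_rate": sum(accepted) / len(accepted),
    }


if __name__ == "__main__":
    kpis = pilot_kpis(BASELINE_MINUTES, PILOT_MINUTES, ACCEPTED_WITHOUT_MAJOR_EDIT)
    for name, value in kpis.items():
        print(f"{name}: {value:.2f}")
```

A real pilot should compare the same clinicians and note types before and after, and collect enough notes for the difference to be meaningful; three notes per arm, as here, only demonstrates the arithmetic.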


Conclusion: The Doctor’s New Co-Pilot

Generative AI in healthcare is not a magic bullet, nor is it a passing fad. It is a foundational technology with a steep learning curve and immense transformative potential. The “myth” is that of autonomous AI replacing human experts. The “reality” is one of collaboration, where technology serves to augment and amplify human intelligence.

Its true value lies not in automating the art of medicine, but in automating the burdens that get in the way of it. Looking ahead, the technology is likely to become more accurate, explainable, and seamlessly integrated into clinical workflows. The central challenge will shift from demonstrating technical feasibility to proving real-world clinical utility, safety, and equity through rigorous validation and clear regulatory pathways.

For clinicians, technologists, and administrators, the task ahead is not to wait for a perfect solution, but to engage critically, ask the tough questions, and collaboratively pilot these tools responsibly. By doing so, we can shape a future where technology genuinely serves to enhance human expertise and improve patient care.