Artificial Intelligence (AI), a term that once belonged to the realm of science fiction, is now an integral part of our daily lives. From the virtual assistants on our smartphones to the complex algorithms that power financial markets, AI is reshaping our world at an unprecedented pace. But what exactly is AI? This blog post aims to demystify artificial intelligence by exploring its definition, history, core concepts, transformative applications, and current trends, as well as the exciting yet challenging future it promises.

What is Artificial Intelligence? A Deeper Dive

At its core, Artificial Intelligence refers to the ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings. According to Britannica, the term is frequently applied to the project of developing systems endowed with the intellectual processes characteristic of humans, such as the ability to reason, discover meaning, generalize, or learn from past experience. IBM further elaborates that AI is “technology that enables computers and machines to simulate human learning, comprehension, problem solving, decision making, creativity and autonomy” (IBM).

It’s crucial to distinguish AI from related terms:

- Machine Learning (ML): A subset of AI, ML is a method to train a computer to learn from its inputs without being explicitly programmed for every circumstance. As Britannica notes, “Machine learning helps a computer to achieve artificial intelligence.”

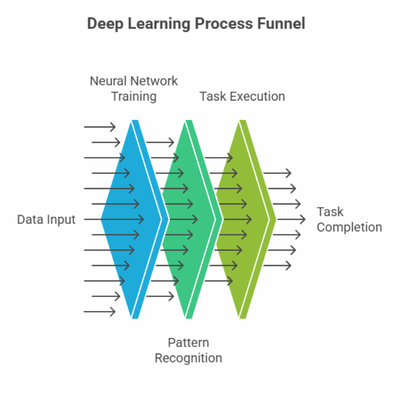

- Deep Learning (DL): A specialized subset of machine learning that involves training artificial neural networks with multiple layers to recognize patterns in data. Deep learning models are particularly effective for complex tasks like image and speech recognition, and natural language processing (Sunscrapers).
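
To make "learning from inputs" concrete, here is a minimal Python sketch written for this post, not taken from any cited source. It does not hard-code the rule y = 2x + 1. Instead, it recovers the rule from example input-output pairs using gradient descent on the mean squared error. The function name `fit_line`, the learning rate, and the toy data are illustrative assumptions. Real machine learning systems use far richer models and optimizers.

```python
def fit_line(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w*x + b by batch gradient descent on mean squared error (illustrative sketch)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step both parameters against their gradients
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical training examples generated from the "hidden" rule y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]

w, b = fit_line(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")
```

After training, the learned values should be very close to w = 2 and b = 1. The loop never sees the rule itself, only examples of it. That is the essential difference between machine learning and explicit programming.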

A Journey Through Time: Key Milestones in AI History

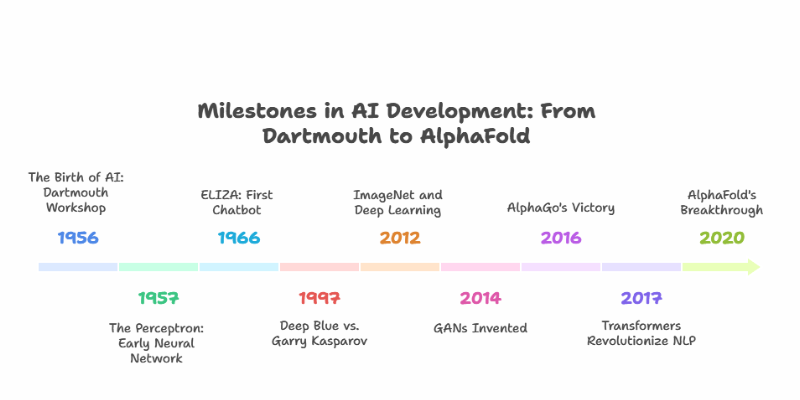

The concept of artificial beings endowed with intelligence dates back to antiquity, with myths and stories of automatons (Tableau). However, the formal field of AI research began in the mid-20th century.

- 1956: The Birth of AI: John McCarthy coined the term “artificial intelligence” for the Dartmouth Workshop, a pivotal 1956 event that brought together pioneers in the field (Iberdrola). McCarthy also created LISP in 1958, one of the earliest programming languages designed for AI research.

- 1957: The Perceptron: Frank Rosenblatt developed the Perceptron, an early neural network, laying groundwork for future machine learning models (Medium – Higher Neurons).

- 1966: ELIZA: Joseph Weizenbaum at MIT created ELIZA, an early natural language processing program that simulated conversation, often considered the first chatbot (Bernard Marr).

- 1970s-1980s: Expert Systems Era: AI found practical applications. For example, Digital Equipment Corporation’s XCON expert system reportedly saved the company $40 million annually by 1986 (Bernard Marr).

- 1997: Deep Blue vs. Garry Kasparov: IBM’s Deep Blue chess-playing computer defeated world champion Garry Kasparov, showcasing AI’s prowess in complex strategic games (Medium – Higher Neurons).

- 2012: ImageNet and Deep Learning: The ImageNet Large Scale Visual Recognition Challenge saw a deep learning model significantly outperform traditional methods, heralding the rise of deep learning (Medium – Higher Neurons).

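- 2014: GANs Invented: Ian Goodfellow and colleagues introduced Generative Adversarial Networks (GANs). In a GAN, two neural networks, a generator and a discriminator, compete with each other, and through this competition the generator learns to produce realistic synthetic data such as images (Royal Institution).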

- 2016: AlphaGo’s Victory: Google DeepMind’s AlphaGo defeated world Go champion Lee Sedol, a feat considered much harder than chess due to Go’s complexity (Royal Institution).

- 2017: Transformers: The introduction of the Transformer architecture revolutionized natural language processing, forming the basis for large language models (LLMs) like GPT (Royal Institution).

- 2020: AlphaFold’s Breakthrough: DeepMind’s AlphaFold made significant progress in solving the protein folding problem, a major challenge in biology (Royal Institution).

The Building Blocks: Fundamental Concepts of AI

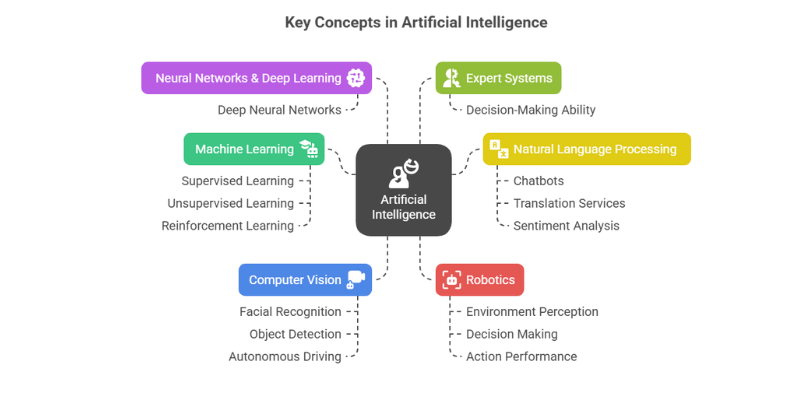

Understanding AI requires familiarity with its core concepts:

- Machine Learning (ML): As mentioned, ML enables systems to learn from data. Key types include:

  - Supervised Learning: Training models on labeled data (input-output pairs).
  - Unsupervised Learning: Training models on unlabeled data to find patterns or structures.
  - Reinforcement Learning: Training agents to make decisions by rewarding desired behaviors and/or punishing undesired ones (Sunscrapers).

- Neural Networks & Deep Learning: Inspired by the human brain, neural networks are systems of interconnected “neurons” that process information. Deep learning uses neural networks with many layers (deep neural networks) to learn complex patterns from vast amounts of data.

- Natural Language Processing (NLP): A subfield of AI focused on enabling computers to understand, interpret, and generate human language. This powers chatbots, translation services, and sentiment analysis (Sunscrapers).

- Computer Vision: This field deals with how computers can gain high-level understanding from digital images or videos. Applications include facial recognition, object detection, and autonomous driving.

- Robotics: Integrates AI with physical machines, enabling robots to perceive their environment, make decisions, and perform actions.

- Expert Systems: AI systems that emulate the decision-making ability of a human expert in a specific domain.
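
k-means clustering is one classic algorithm for the unsupervised learning described above. We picked it as an example; this post does not prescribe a specific method. The sketch alternates between two steps: assigning each point to its nearest centroid, then moving each centroid to the mean of its assigned points. The toy points, the function name `kmeans`, and the simple initialization (the first k points) are assumptions for illustration. Practical implementations use smarter seeding such as k-means++.

```python
import math

def kmeans(points, k, iters=20):
    """Basic k-means on 2-D points; the first k points seed the centroids (a simplification)."""
    centroids = list(points[:k])
    clusters = []
    for _ in range(iters):
        # Assignment step: attach every point to its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its assigned points
        for c in range(k):
            if clusters[c]:  # keep the previous centroid if a cluster is empty
                xs, ys = zip(*clusters[c])
                centroids[c] = (sum(xs) / len(xs), sum(ys) / len(ys))
    return centroids, clusters

# Hypothetical unlabeled data: two loose groups of points
points = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (0.5, 1.2), (8.5, 7.5), (7.8, 8.6)]
centroids, clusters = kmeans(points, k=2)
for centroid, members in zip(centroids, clusters):
    print(f"centroid ({centroid[0]:.2f}, {centroid[1]:.2f}): {members}")
```

No labels are supplied, yet the algorithm separates the two groups of nearby points on its own.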
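
Tabular Q-learning, a classic algorithm picked here for illustration, shows how reinforcement learning works. In this sketch, a hypothetical agent lives in a five-cell corridor and receives a reward of 1 only when it reaches the last cell. Over many episodes, it learns which action is worth taking in each cell. The environment, the reward, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are all assumptions chosen to keep the example small.

```python
import random

N_STATES = 5        # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, 1)   # step left or step right

def step(state, action):
    """Toy environment: move along the corridor; reaching the goal yields reward 1."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def greedy(q, state, rng):
    """Highest-valued action in this state, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit current estimates, occasionally explore
            action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(q, state, rng)
            nxt, reward, done = step(state, action)
            # Move Q(s, a) toward reward + discounted value of the best next action
            target = reward if done else reward + gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
    return q

q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
print(policy)  # greedy action learned for each non-goal cell
```

After training, the greedy policy should choose "step right" (1) in every non-goal cell. The occasional random action, known as epsilon-greedy exploration, is what lets the agent discover the reward in the first place.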
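
At the smallest scale, a neural network is built from artificial neurons. The sketch below trains a single neuron to reproduce the logical AND function. It is a perceptron of the kind Frank Rosenblatt introduced in 1957. The learning rate, epoch count, and helper names are illustrative assumptions. Modern deep networks work differently: they stack many layers of neurons with smooth activation functions and are trained with backpropagation rather than this simple update rule.

```python
def train_perceptron(samples, epochs=10, lr=1.0):
    """Perceptron learning rule: nudge weights toward misclassified examples."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            activation = sum(w * x for w, x in zip(weights, inputs)) + bias
            output = 1 if activation > 0 else 0   # step activation function
            error = target - output               # -1, 0, or +1
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias

def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum plus bias is positive, else 0."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0

# Truth table for logical AND: ((input1, input2), expected output)
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_DATA)
print([predict(weights, bias, x) for x, _ in AND_DATA])  # → [0, 0, 0, 1]
```

A single perceptron can only learn linearly separable functions. It cannot learn XOR, for example. This limitation is one reason deep learning stacks many layers of neurons.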
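
Many classic expert systems encode an expert's knowledge as if-then rules and apply them with an inference engine. The sketch below shows one common strategy, forward chaining. A rule fires whenever all of its conditions are known facts, and the loop repeats until nothing new can be derived. The animal-identification rules are a hypothetical toy. Real expert systems use much larger rule bases and more sophisticated inference engines.

```python
# Hypothetical rule base: (set of required facts, conclusion to add)
RULES = [
    ({"has_feathers"}, "is_bird"),
    ({"is_bird", "cannot_fly", "swims"}, "is_penguin"),
    ({"has_fur"}, "is_mammal"),
]

def forward_chain(facts, rules):
    """Fire every rule whose conditions are all known until no new facts appear."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

observations = {"has_feathers", "cannot_fly", "swims"}
derived = forward_chain(observations, RULES)
print(sorted(derived - observations))  # → ['is_bird', 'is_penguin']
```

The conclusion "is_penguin" is reached in two steps. First, "has_feathers" establishes "is_bird". Second, that derived fact combines with the other observations to trigger the next rule.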

AI in Action: Transforming Industries

AI is no longer a futuristic concept but a present-day reality, driving innovation across numerous sectors. Its versatility allows for personalized experiences in retail, predictive maintenance in manufacturing, and advanced analytics in healthcare, among many other applications (LeewayHertz).

Key industry applications include:

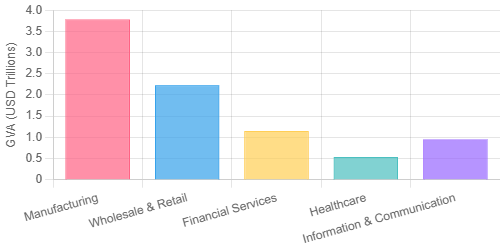

- Healthcare: AI aids in diagnostics (e.g., analyzing medical images), drug discovery, personalized treatment plans, and robotic surgery. The global AI in healthcare market was valued at $32.3 billion in 2024, with a projected compound annual growth rate (CAGR) of 36.4% from 2024 to 2030 (Vena Solutions). AI tools for clinical documentation are also reported to be saving doctors significant time each day.

- Finance: Used for fraud detection, algorithmic trading, credit scoring, risk management, and customer service chatbots. AI technology is projected to add $1 billion to the banking industry by 2025 (Exploding Topics).

- Retail: Powers recommendation engines, personalized marketing, supply chain optimization, inventory management, and customer service.

- Manufacturing: Enables predictive maintenance, quality control through computer vision, supply chain optimization, and robotics for automation. The manufacturing industry stands to gain an estimated $3.78 trillion from AI by 2035 (Exploding Topics, citing Accenture).

- Transportation: Development of autonomous vehicles, route optimization, traffic management, and logistics.

- Marketing and Sales: AI algorithms can increase leads by as much as 50% and improve revenue through personalized advertising and email marketing (Exploding Topics, citing Harvard Business Review and Statista).
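
A compound annual growth rate (CAGR) describes growth as if it happened at a steady yearly rate. Under that assumption, a starting value V grows to V × (1 + CAGR)^n after n years. The short Python sketch below applies this formula to the Vena Solutions healthcare figures quoted above. The resulting 2030 value is simple arithmetic on the stated projection, not a number reported by the source. It also assumes the growth rate holds exactly for all six years.

```python
def project(value, cagr, years):
    """Compound a starting value at a constant annual growth rate (CAGR)."""
    return value * (1 + cagr) ** years

# Inputs quoted in the text: $32.3B market in 2024, 36.4% CAGR through 2030
start_value_billion = 32.3
implied_2030 = project(start_value_billion, 0.364, 2030 - 2024)  # six years of compounding
print(f"Implied 2030 market size: ${implied_2030:.1f}B")  # → $208.0B
```

Because growth compounds, a 36.4% annual rate multiplies the starting value roughly 6.4 times over six years, rather than adding 36.4% of the 2024 value each year.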

Chart: Projected Gross Value Added (GVA) by AI in Key Industries by 2035 (USD Trillions)

The AI Revolution: Current Trends (2024-2025)

The AI landscape is evolving rapidly. As of 2025, several key trends are shaping its trajectory:

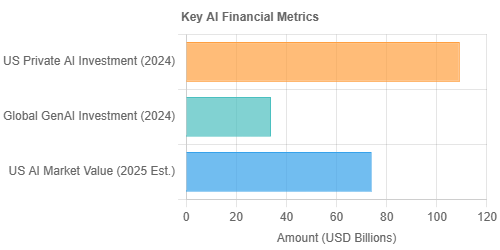

- Generative AI Explosion: Models like ChatGPT have brought generative AI to the forefront, capable of creating text, images, code, and more. Generative AI attracted $33.9 billion globally in private investment in 2024 (Stanford HAI – 2025 AI Index Report).

- Rise of Agentic AI: AI systems are becoming more autonomous, capable of carrying out multi-step tasks and making decisions with less human intervention. Microsoft predicts that AI-powered agents will take on more work with greater autonomy (Microsoft News).

- Multimodal AI: Systems that can process and understand multiple types of data (text, images, audio, video) simultaneously are becoming more sophisticated.

- AI in Scientific Discovery: AI is accelerating research in fields like drug discovery, materials science, and climate change modeling. For instance, companies like Exscientia are using AI for drug discovery, with drugs currently in human trials (Exploding Topics).

- Increased Business Adoption: A clear majority of organizations now use AI. In 2024, 78% of organizations reported using AI, up from 55% the previous year (Stanford HAI – 2025 AI Index Report), and 83% of companies say AI is a top priority in their business strategies (Exploding Topics).

- Focus on Responsible AI and Governance: As AI becomes more powerful, there’s a growing emphasis on developing and deploying it responsibly, addressing ethical concerns, and establishing governance frameworks.

Chart: AI Investment and Adoption Snapshot (2024 Data)

Beyond the Horizon: The Future of AI

The future of AI holds immense promise, with potential advancements that could redefine human capabilities and societal structures.

- Towards Artificial General Intelligence (AGI): Current AI excels at specific tasks (Narrow AI), whereas AGI refers to AI with human-like cognitive abilities across a wide range of tasks. AGI remains largely theoretical, but some experts believe that approaches focusing on embodiment and interaction with the environment are crucial for its development (The Gradient, arXiv on Embodied AI).

- Embodied AI: AI systems that can interact with the physical world through sensors and actuators, like robots. This is seen as a critical step towards more general intelligence and has applications in robotics, autonomous systems, and human-AI interaction (Medium – Innovation Strategy).

- Hyper-Personalization: AI will likely drive even more personalized experiences in education, healthcare, entertainment, and commerce (BuiltIn.com).

- Solving Global Challenges: AI is expected to play a crucial role in addressing complex global issues such as climate change, disease eradication, and resource management (Microsoft News).

- AI and Environmental Impact: AI applications could improve sustainability, but the computational resources required for training large models also pose environmental concerns (Exploding Topics).

IBM predicts that between now and 2034, AI will become a fixture in many aspects of our personal and business lives (IBM).

The Double-Edged Sword: Ethical Considerations and Challenges

Despite its transformative potential, AI also presents significant ethical challenges and risks that society must navigate carefully.

“AI technology brings major benefits in many areas, but without the ethical guardrails, it risks reproducing real world biases and discrimination, fueling…” – UNESCO

Key ethical concerns include:

- Bias and Discrimination: AI systems are trained on data, and if that data reflects existing societal biases, the AI can perpetuate or even amplify them. This is a concern in areas like hiring, loan applications, and criminal justice (Harvard Gazette).

- Job Displacement: Automation driven by AI could lead to significant job losses in certain sectors, requiring societal adaptation and reskilling efforts. Some experts canvassed by Pew Research warn that this could increase poverty and diminish human dignity (Pew Research).

- Privacy and Surveillance: AI-powered surveillance technologies, such as facial recognition, raise serious privacy concerns.

- Misinformation and Deepfakes: Generative AI can be used to create realistic but fake images, videos, and audio (deepfakes), which can be used to spread misinformation and manipulate public opinion.

- Accountability and Explainability: Determining who is responsible when an AI system makes a mistake can be challenging. Moreover, the decision-making processes of complex AI models (especially deep learning) can be opaque (“black box”), making it difficult to understand why a particular decision was made (IAC Georgia Tech).

- Human Autonomy: Over-reliance on AI for decision-making could erode human autonomy and critical thinking skills.
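
One simple way to check a system for the kind of bias described above is to compare its outcomes across groups. The sketch below takes a list of hiring decisions, computes each group's selection rate, and then divides the lowest rate by the highest. The "four-fifths rule" in the U.S. EEOC's Uniform Guidelines on Employee Selection Procedures generally treats a ratio below 0.8 as evidence of adverse impact. The decision data and function names here are hypothetical. Passing this single check does not show that a system is fair.

```python
def selection_rates(decisions):
    """decisions: list of (group, hired) pairs; returns the hire rate for each group."""
    totals, hires = {}, {}
    for group, hired in decisions:
        totals[group] = totals.get(group, 0) + 1
        hires[group] = hires.get(group, 0) + int(hired)
    return {g: hires[g] / totals[g] for g in totals}

def adverse_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())

# Hypothetical screening-model outputs: 10 applicants in each group
decisions = ([("A", True)] * 6 + [("A", False)] * 4
             + [("B", True)] * 3 + [("B", False)] * 7)
rates = selection_rates(decisions)
ratio = adverse_impact_ratio(rates)
print(rates, round(ratio, 2), "flag for review" if ratio < 0.8 else "ok")
```

In this toy data, group B is selected at half the rate of group A, so the check flags the model for closer review.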

Addressing these challenges requires a multi-faceted approach involving robust governance, ethical guidelines, ongoing research into fair and transparent AI, and public discourse.

Conclusion: Navigating the AI Frontier

Artificial Intelligence is undeniably one of the most powerful and transformative technologies of our time. From its philosophical origins to its current ubiquitous applications and its mind-bending future potential, AI continues to evolve at a breathtaking pace. It offers incredible opportunities to solve complex problems, enhance human capabilities, and drive economic growth.

However, the journey into the AI frontier is not without its perils. The ethical considerations surrounding bias, job displacement, privacy, and accountability demand our urgent attention. As we continue to develop and integrate AI into the fabric of our society, a commitment to responsible innovation, ethical development, and inclusive dialogue will be paramount. The future of AI is not just about what technology can do, but about what we, as a society, choose to do with it.