Your smart assistant can order groceries, play music, or answer trivia. But what if it could also pick up the earliest signs of illness hidden in your voice? This isn’t science fiction; it’s the promise of AI voice biomarkers in healthcare, where subtle changes in speech become powerful clues for early disease detection.

Our voice carries unique health signals, known as vocal biomarkers, that can reveal conditions like Parkinson’s, depression, or even cardiovascular issues, often before noticeable symptoms appear. By combining these biomarkers with AI chatbots, healthcare is shifting from reactive treatment to proactive, continuous monitoring. The result? Early detection that is more accessible, affordable, and non-invasive than ever before (The Use of Vocal Biomarkers from Research to Clinical Practice, PMC).

In this article, we’ll explore how AI deciphers vocal biomarkers, real-world breakthroughs in detecting complex diseases, the startups driving this revolution, and the ethical challenges that must be addressed.


The Science Behind the Sound: How AI Deciphers Vocal Biomarkers

The idea that our voice reflects health is nothing new: clinicians have always listened for slurred speech or weak tones as warning signs. What’s new is the ability of AI to measure these subtle changes consistently and at a level of detail the unaided ear cannot reach. Today, AI can analyze not just what we say, but how we say it, unlocking an entirely new layer of physiological insight.

What Are Vocal Biomarkers? The Health Signature in Your Speech

In simple terms, a vocal biomarker is a measurable, objective characteristic of the voice that is associated with a specific health condition. Think of it as a unique ‘vocal fingerprint’ that changes when our physical or mental health is affected. As defined in a comprehensive review, a vocal biomarker is a feature or combination of features from an audio signal that can be used to monitor patients, diagnose a condition, or grade its severity (The Use of Vocal Biomarkers from Research to Clinical Practice, PMC).

The link between voice and health is direct. Disorders affecting the brain, muscles, lungs, or vocal folds can alter the mechanics of speech. While these changes are often too subtle for the human ear, AI models can detect and quantify them, opening a powerful new path for early diagnosis.

Key acoustic features that AI models analyze include:

- Pitch & Prosody: This refers to the melody, rhythm, and intonation of speech. For example, in patients with depression, speech prosody often becomes monotonous or “flat,” a change that AI can quantify (Vocal Acoustic Features as Potential Biomarkers for Identifying Depression, PMC).

- Jitter & Shimmer: These are micro-variations in vocal frequency (jitter) and amplitude (shimmer). Elevated levels of jitter and shimmer can indicate a loss of fine motor control over the vocal muscles, a common and early symptom in conditions like Parkinson’s disease (Explainable artificial intelligence to diagnose early Parkinson’s, Nature).

- Speech Rate & Pauses: The speed of talking, the length of pauses, and the frequency of hesitations or filler words (“um,” “ah”) can be powerful indicators of cognitive load or decline. These features are particularly relevant for detecting mild cognitive impairment (MCI) and Alzheimer’s disease (AI-based voice biomarker models to detect MCI, The Lancet).

- Articulation: The clarity and precision of consonants and vowels can degrade due to conditions affecting motor control, such as stroke or multiple sclerosis.

- Spectral Features (MFCCs): This is where AI’s analytical strength really shows. Mel-Frequency Cepstral Coefficients (MFCCs) summarize the shape of the voice’s short-term spectrum on the mel scale, which spaces frequencies non-linearly to mimic how the human ear perceives pitch. This lets AI capture the texture and quality of a voice in a compact form. By breaking down these patterns, MFCCs reveal subtle vocal traits that often go unnoticed, yet can strongly indicate conditions like depression or Parkinson’s disease (Exploring MFCCs for interpretable speech biomarkers, PMC).
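To make jitter and shimmer concrete, here is a minimal Python sketch of their “local” variants, plus the mel-scale mapping that underlies MFCCs. It assumes the length and peak amplitude of each vocal-fold cycle have already been measured (tools such as Praat do this from the raw waveform); the function names and sample values are hypothetical, and the mel formula shown is one common variant among several.

```python
import math


def local_jitter(periods):
    """Mean absolute difference between consecutive cycle lengths,
    divided by the mean cycle length (a unitless ratio)."""
    if len(periods) < 2:
        raise ValueError("need at least two cycles")
    diffs = [abs(b - a) for a, b in zip(periods, periods[1:])]
    return (sum(diffs) / len(diffs)) / (sum(periods) / len(periods))


def local_shimmer(amplitudes):
    """Same idea as jitter, applied to per-cycle peak amplitudes."""
    if len(amplitudes) < 2:
        raise ValueError("need at least two cycles")
    diffs = [abs(b - a) for a, b in zip(amplitudes, amplitudes[1:])]
    return (sum(diffs) / len(diffs)) / (sum(amplitudes) / len(amplitudes))


def hz_to_mel(freq_hz):
    """Map a frequency in Hz onto the mel scale, which compresses high
    frequencies roughly the way human pitch perception does."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


# Hypothetical cycle lengths (seconds) for a voice near 125 Hz, and peak amplitudes.
periods = [0.0080, 0.0081, 0.0079, 0.0080, 0.0082]
amps = [0.50, 0.52, 0.49, 0.51, 0.50]
print(f"jitter  = {local_jitter(periods):.2%}")
print(f"shimmer = {local_shimmer(amps):.2%}")
print(f"1000 Hz = {hz_to_mel(1000):.0f} mel")
```

Both measures are normalized by the mean, so they are unitless and comparable across voices with different pitch. In practice, reliably finding cycle boundaries in the waveform is the difficult step, which this sketch skips.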

The AI Engine: From Soundwave to Health Insight

The process of turning a raw audio recording into a potential health alert is a multi-step machine learning pipeline. It differs from the speech recognition used by consumer smart assistants, which focuses on transcribing words; vocal biomarker analysis is about deconstructing the soundwave itself.

The typical pipeline involves the following stages:

- Data Collection: The process starts with recording a voice sample, often through a smartphone or simple device. This may involve reading a set passage for consistency or giving a natural, free-flowing response like “Tell me about your day.” In the context of AI voice biomarkers in healthcare, these recordings capture subtle vocal patterns that AI can later analyze for early signs of disease (Evaluation of an AI-Based Voice Biomarker Tool, Annals of Family Medicine).

- Feature Extraction: Once a voice sample is collected, the system processes the raw audio and breaks it into measurable features. This step, part of what is often called feature engineering, computes hundreds of acoustic features such as jitter, shimmer, and MFCCs. These values turn the soundwave into a structured dataset that machine learning models can analyze, and they are what make subtle voice changes linked to early disease detectable (Feature Engineering in Speech Recognition, Medium).

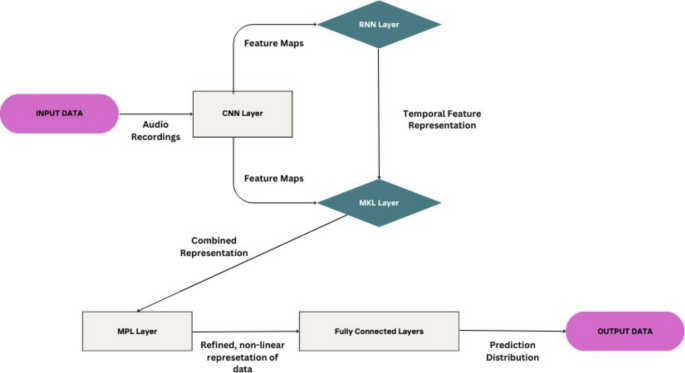

- Model Training: This is the core of the AI system. Deep learning models, such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), are trained on labeled datasets of voice samples, each linked to a confirmed diagnosis such as healthy, Parkinson’s, or depression. CNNs detect patterns in spectrograms (visual sound maps), while RNNs capture the timing and flow of speech. Together, they allow the system to recognize subtle vocal changes that reveal early signs of disease (Explainable AI to diagnose early Parkinson’s, Nature).

- Prediction & Classification: After training, the model compares a new voice sample with learned patterns and outputs a prediction, such as a label (“possible depression”) or a risk score for a condition. In AI voice biomarkers in healthcare, this step turns speech into clear, actionable insights.
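The stages above can be illustrated end to end with a deliberately tiny stand-in. This sketch swaps the CNN/RNN models described above for a plain logistic regression trained by gradient descent on just two hand-picked features (jitter and mean pause length). Every feature value, label, and the 0.5 threshold are made up for illustration; real systems use hundreds of features and clinically labeled recordings.

```python
import math

# Feature extraction output (hypothetical): one vector per recording,
# [local jitter in %, mean pause length in seconds],
# each paired with a confirmed label (1 = condition, 0 = healthy) for training.
train_x = [[0.4, 0.30], [0.5, 0.25], [0.6, 0.35], [0.5, 0.40],
           [1.4, 0.90], [1.6, 0.80], [1.2, 1.10], [1.5, 0.95]]
train_y = [0, 0, 0, 0, 1, 1, 1, 1]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(xs, ys, lr=0.5, epochs=2000):
    """Model training: fit weights by batch gradient descent on the log-loss."""
    n_features = len(xs[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for j in range(n_features):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wi - lr * g / len(xs) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(xs)
    return w, b


def risk_score(w, b, x):
    """Prediction: map a new recording's features to a 0-1 risk score."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


weights, bias = train_logistic(train_x, train_y)
new_recording = [1.3, 0.85]  # hypothetical features from a new sample
score = risk_score(weights, bias, new_recording)
print(f"risk score: {score:.2f}")
if score >= 0.5:  # assumed decision threshold
    print("flag for clinician review")
```

A linear model keeps every step visible; deep networks replace the hand-picked features and the single weighted sum with learned representations, but the train-then-score structure is the same.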

Making AI Trustworthy: The Role of Explainable AI (XAI)

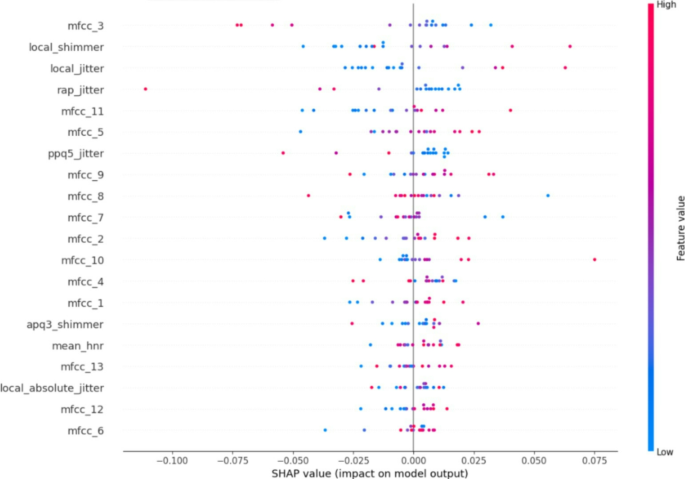

One major challenge in adopting AI for healthcare is the “black box” problem: clinicians hesitate to trust a diagnosis without knowing how it was made. This is where Explainable AI (XAI) steps in. XAI makes model decisions transparent by showing which voice features actually influenced the result. For example, a study on Parkinson’s disease used SHAP (SHapley Additive exPlanations) to highlight vocal features such as `mfcc_3`, `local_shimmer`, and `local_jitter` as key drivers of the model’s predictions. With this clarity, AI voice biomarkers in healthcare become more trustworthy, turning AI into a transparent partner rather than a mysterious oracle.
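SHAP builds on Shapley values from cooperative game theory. For a model with only a few features, those values can be computed exactly by brute force, which makes the idea easy to inspect. The sketch below assumes a hypothetical risk model and replaces “absent” features with a fixed baseline (for example, typical healthy values). The SHAP library itself uses optimized estimators and background datasets, so treat this as an illustration of the principle, not of that library’s API.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values: each feature's weighted average marginal
    contribution over all subsets of the other features. Features outside
    a subset are set to their baseline value."""
    n = len(x)

    def value(subset):
        mixed = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(mixed)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


# Hypothetical model and inputs; feature names borrowed from the study above.
names = ["mfcc_3", "local_shimmer", "local_jitter"]


def model(f):
    # Made-up risk score with an interaction between shimmer and jitter.
    return 0.2 * f[0] + 3.0 * f[1] + 5.0 * f[2] + 20.0 * f[1] * f[2]


patient = [1.0, 0.06, 0.02]
baseline = [0.5, 0.03, 0.005]
for name, phi in zip(names, shapley_values(model, patient, baseline)):
    print(f"{name:>14}: {phi:+.3f}")
```

A useful sanity check is the efficiency property: the values always sum to the difference between the prediction for the patient and the prediction for the baseline. The brute-force cost grows as 2^n in the number of features, which is why practical tools approximate.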

From Theory to Clinic: Real-World Use Cases in Disease Detection

The potential of AI-powered voice analysis is not merely theoretical. Across the globe, researchers and startups are translating this science into clinical applications, with remarkable results in several key areas of medicine.

Case Study: Detecting the Tremor in the Voice for Parkinson’s Disease

The Why: Parkinson’s disease is a progressive brain disorder best known for tremors, but one of its earliest signs is subtle voice changes (dysphonia). As the disease affects the tiny muscles controlling speech, vocal shifts often appear years before noticeable motor symptoms—creating a crucial window for early detection through AI voice biomarkers in healthcare (The Use of Vocal Biomarkers, PMC).

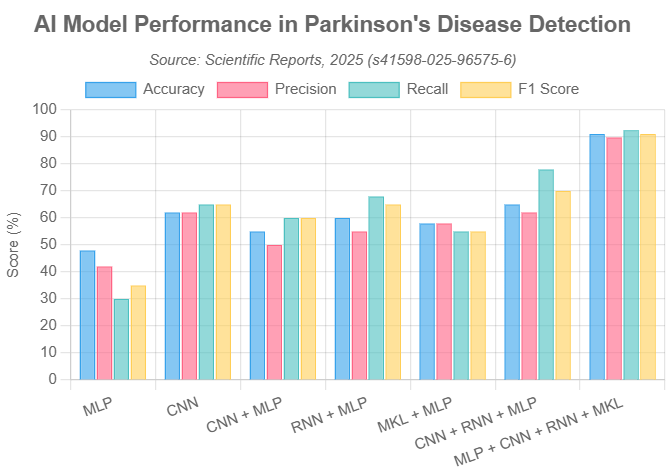

The Evidence: Studies report strong results. A 2025 Scientific Reports study using a hybrid deep learning model (combining CNN, RNN, MKL, and MLP components) achieved 91% accuracy in detecting Parkinson’s from voice alone (Explainable artificial intelligence to diagnose early Parkinson’s, Nature). Reviews of similar research report accuracy rates between 78–96%. These findings suggest AI voice biomarkers in healthcare can offer a non-invasive, low-cost, and rapid screening option alongside traditional tests like imaging or lengthy clinical exams (AI-driven precision diagnosis in Parkinson’s, 2025).

The chart above, based on data from the *Scientific Reports* study, illustrates the superior performance of a hybrid AI model (`MLP + CNN + RNN + MKL`) compared to simpler models across key metrics like accuracy, precision, recall, and F1 score, consistently achieving scores around 90%.
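For readers less familiar with the four metrics named in the chart, each is a simple ratio over a confusion matrix. A minimal sketch; the counts below are hypothetical and are not taken from the study.

```python
def classification_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall (sensitivity), and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp)  # of those flagged, how many truly have the condition
    recall = tp / (tp + fn)     # of those with the condition, how many were flagged
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of the two
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical screening of 200 recordings: 100 with Parkinson's, 100 without.
metrics = classification_metrics(tp=90, fp=8, tn=92, fn=10)
for name, value in metrics.items():
    print(f"{name:>9}: {value:.3f}")
```

Reporting all four matters because accuracy alone can look high on imbalanced data: a model that never flags anyone scores 95% accuracy in a group where only 5% have the condition, while catching no cases at all.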

Startup Spotlight: Companies like Aural Analytics and Winterlight Labs are leading this field. Aural Analytics, based in Arizona, develops speech analysis tools to track neurological conditions such as Parkinson’s, ALS, and stroke recovery. Canadian firm Winterlight Labs uses AI voice analysis to detect and monitor cognitive disorders, including Alzheimer’s and Parkinson’s, often supporting clinical trials (Leading Companies in the Global Vocal Biomarkers Market 2025).

Case Study: Hearing the Weight of Depression and Anxiety

The Why: Mental health strongly influences how we speak. Depression often causes slower speech, longer pauses, flatter tone, and reduced emotional expression. These vocal changes, captured through AI voice biomarkers in healthcare, provide measurable signals that complement traditional self-report questionnaires (Vocal Acoustic Features for Identifying Depression, PMC).

The Evidence: A study in The Annals of Family Medicine evaluated Kintsugi’s AI tool, which analyzed short speech samples and compared its output with the PHQ-9 depression screening questionnaire. It showed 71% sensitivity and 74% specificity, suggesting value as a decision-support tool. Other studies report that voice-based models can predict depression severity in close agreement with clinical scales like HAMD-17 (Fast and accurate assessment of depression based on voice, Frontiers in Psychiatry).
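Sensitivity and specificity describe the test itself, but what a flagged patient most wants to know is the chance the flag is correct, and that also depends on how common the condition is in the screened group. A short Bayes’ rule calculation with the reported 71% sensitivity and 74% specificity shows the effect; the 10% prevalence is an assumption for illustration, not a figure from the study.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Positive and negative predictive value via Bayes' rule."""
    tp = sensitivity * prevalence               # true positives (share of population)
    fp = (1 - specificity) * (1 - prevalence)   # false positives
    fn = (1 - sensitivity) * prevalence         # false negatives
    tn = specificity * (1 - prevalence)         # true negatives
    return tp / (tp + fp), tn / (tn + fn)


# Reported sensitivity/specificity; the 10% prevalence is an assumed example.
ppv, npv = predictive_values(0.71, 0.74, 0.10)
print(f"PPV = {ppv:.0%}, NPV = {npv:.0%}")
```

Under that assumption, roughly one in four flagged patients would actually meet the PHQ-9 threshold, while a negative result would be correct about 96% of the time. This is why such a tool fits triage and decision support rather than stand-alone diagnosis.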

Startup Spotlight: Kintsugi and Sonde Health are driving innovation in AI voice biomarkers in healthcare. Kintsugi develops tools to detect depression and anxiety, embedding them into telehealth and call centers for scalable mental health screening. Sonde Health applies AI voice analysis to both mental and respiratory health, making the voice a vital sign for proactive care.

Promising Frontiers: What’s Next for Vocal Biomarkers?

The application of voice analysis in healthcare extends far beyond Parkinson’s and depression. Researchers are actively exploring its potential across a wide spectrum of conditions:

- Alzheimer’s & Cognitive Decline: AI voice biomarkers in healthcare can spot subtle speech changes—like word-finding issues or reduced grammar complexity—that signal early cognitive decline. In one study, AI speech analysis predicted Alzheimer’s progression with over 78% accuracy (NIA, AI speech analysis for Alzheimer’s).

- Cardiovascular Disease: In a groundbreaking study, a team from the Mayo Clinic identified specific voice features associated with a higher risk of coronary artery disease, suggesting that the voice may hold clues about the health of our vascular system (Mayo Clinic News Network).

- Respiratory Illnesses: The COVID-19 pandemic accelerated research into using AI voice biomarkers in healthcare to detect respiratory symptoms. Startups like TalkingSick analyze vocal changes from infections such as COVID-19 or the flu, offering pre-symptomatic screening by spotting deviations from a person’s healthy voice baseline (Penn State News).

- Stroke: Stroke often alters speech, causing mispronunciations and unnatural intonation. A Korean study reported that an AI system analyzing patient voices reached 99.6% accuracy, suggesting that voice analysis could support earlier detection and help prevent complications (AI-Based Speech Analysis for Stroke, PMC).
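The “deviation from a personal baseline” idea mentioned for respiratory screening can be sketched with a per-person z-score: summarize a feature over recordings made while healthy, then flag a new recording that falls far outside that range. The feature, its values, and the 3-standard-deviation threshold are all hypothetical; real systems would track many features and validate thresholds clinically.

```python
from statistics import mean, stdev


def deviation_score(baseline_values, new_value):
    """How many standard deviations a new measurement sits from the
    person's own healthy baseline."""
    mu = mean(baseline_values)
    sigma = stdev(baseline_values)
    if sigma == 0:
        raise ValueError("baseline has no variation")
    return (new_value - mu) / sigma


# Hypothetical daily values of one voice feature (e.g., harmonics-to-noise ratio, dB).
healthy_days = [20.1, 19.8, 20.4, 20.0, 19.7, 20.2, 20.3]
today = 17.9

z = deviation_score(healthy_days, today)
THRESHOLD = 3.0  # assumed cut-off, not clinically validated
status = "deviation flagged" if abs(z) > THRESHOLD else "within baseline"
print(f"z = {z:.1f} -> {status}")
```

Comparing each person with their own history, rather than with a population average, sidesteps natural differences between voices; the trade-off is that it needs several healthy recordings before it can flag anything.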

The Double-Edged Sword: A Revolution in Care vs. The Risks Within

The promise of turning every smartphone into a potential diagnostic tool is immense, but it comes with profound responsibilities. As we stand on the cusp of this new era, it is crucial to weigh the transformative benefits against the significant ethical, privacy, and regulatory challenges.

The Upside: A New Era of Proactive, Accessible Healthcare

The advantages of integrating AI and voice biomarkers into healthcare are compelling and could fundamentally reshape how we manage health:

- Accessibility and Equity: One of the greatest benefits of AI voice biomarkers in healthcare is democratizing screening. With just a smartphone, people in rural or underserved areas can access early detection tools, overcoming geographic and socioeconomic barriers to timely care (Explainable AI to diagnose early Parkinson’s, Nature).

- Cost-Effectiveness: AI voice biomarkers in healthcare can make screening faster and far cheaper than invasive tests or costly scans such as MRI and PET-CT. This approach can greatly ease the financial burden on both patients and healthcare systems.

- Continuous and Non-Invasive Monitoring: With AI voice biomarkers in healthcare, voice samples can be collected anytime, anywhere—without discomfort. This enables continuous remote tracking of disease progression or treatment response, giving clinicians richer insights than occasional clinic visits (AI listens for health conditions, Nature).

- Early Intervention: By catching diseases in their earliest stages—sometimes before a patient is even aware of symptoms—vocal biomarkers enable earlier intervention, which is critical for improving outcomes in progressive conditions like Parkinson’s and Alzheimer’s.

The Downside: Navigating Critical Ethical and Regulatory Hurdles

With great power comes great responsibility. The widespread adoption of AI voice analysis in healthcare is contingent on successfully navigating a minefield of ethical and logistical challenges.

- Data Privacy & Security: The voice is one of the most personal biometrics, raising urgent questions: who owns the data, how is it stored, and how is it protected? For AI voice biomarkers in healthcare, strict compliance with HIPAA and GDPR, covering encryption, consent, and security, is vital (Ethical and legal considerations in healthcare AI, PMC). Even so, risks like voice spoofing and deepfakes remain, demanding advanced anti-spoofing safeguards (Voice Recognition and Security, RINF.tech).

- Algorithmic Bias: A major challenge for AI voice biomarkers in healthcare is bias in training data. If models are built mainly on one demographic, their accuracy can drop for women, older adults, or people with different accents—risking misdiagnosis and widening health disparities. The solution lies in creating diverse, representative datasets to ensure fairness and reliability (Fairness of artificial intelligence in healthcare, PMC).

- Accountability & Human in the Loop: A key ethical issue in AI voice biomarkers in healthcare is responsibility when errors occur—should it fall on developers, hospitals, or clinicians? Experts agree these tools must act as decision-support, not replacements. Keeping a “human in the loop” ensures a qualified clinician reviews AI outputs and makes the final medical decision (As AI in medicine takes off, can ‘human in the loop’ prevent harm?, STAT News).

- Regulatory Oversight: Authorities are moving quickly to regulate AI voice biomarkers in healthcare. In the US, the FDA regulates many of these tools as Software as a Medical Device (SaMD) (FDA AI/ML SaMD Action Plan), requiring strict validation of safety and effectiveness. In Europe, the EU AI Act treats AI systems used in medical devices as “high-risk,” mandating transparency, high-quality data, and strong human oversight (EU AI Act, European Parliament).
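One practical safeguard against the algorithmic bias described above is to report performance separately for each demographic group instead of a single overall number. A minimal sketch, assuming hypothetical evaluation records of (group, true label, predicted label):

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Share of true cases the model caught, computed per subgroup."""
    caught = defaultdict(int)
    cases = defaultdict(int)
    for group, truth, predicted in records:
        if truth == 1:
            cases[group] += 1
            caught[group] += int(predicted == 1)
    return {g: caught[g] / cases[g] for g in cases}


# Hypothetical evaluation results: (subgroup, true label, model prediction)
results = [
    ("men", 1, 1), ("men", 1, 1), ("men", 1, 1), ("men", 1, 0), ("men", 0, 0),
    ("women", 1, 1), ("women", 1, 0), ("women", 1, 0), ("women", 1, 1), ("women", 0, 0),
]
rates = sensitivity_by_group(results)
for group, rate in sorted(rates.items()):
    print(f"{group:>6}: sensitivity {rate:.0%}")
gap = max(rates.values()) - min(rates.values())
print(f"gap: {gap:.0%}")  # a large gap suggests the model may under-serve a group
```

Which metric to compare, and how large a gap is acceptable, are decisions for the validation team; the same breakdown works for age bands or accent groups.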

Frequently Asked Questions (FAQ)

Q1: Is my smart speaker or phone secretly diagnosing me?

A: No. Commercial voice assistants like Alexa, Siri, or Google Assistant are not designed or regulated for medical diagnosis. The **AI voice biomarker** tools discussed here are specialized, medical-grade applications that operate under strict healthcare regulations like HIPAA. They require your explicit consent to collect and analyze your voice for health purposes. Your private conversations are not being analyzed for signs of illness without your knowledge and permission.

Q2: How accurate is this technology? Can it replace a doctor?

A: The accuracy is highly promising and continually improving, with some studies reporting over 90% accuracy for specific, well-defined tasks like Parkinson’s screening (AI-driven precision diagnosis in Parkinson’s, 2025). However, it is absolutely not a replacement for a doctor. The consensus among experts is that this technology should be viewed as an early warning or screening tool—much like a smoke detector. It can alert you to a potential problem, but a trained professional (a firefighter, or in this case, a doctor) is essential to confirm the issue, conduct a full diagnostic workup, and determine the appropriate course of action. The “human in the loop” remains indispensable.

Q3: When will I be able to use these tools?

A: Some of these tools are already being used in controlled environments, such as clinical trials and within specific telehealth platforms or healthcare systems that have partnered with technology providers. Widespread public availability is still on the horizon, as companies must navigate the lengthy and rigorous processes of clinical validation and regulatory approval from bodies like the FDA. However, the field is advancing rapidly. Given the current pace of research and investment, it is reasonable to expect these technologies to become more integrated into mainstream healthcare over the next 3-5 years.

Conclusion

Key Takeaways

- The human voice contains measurable **vocal biomarkers** that can indicate the presence of neurological, mental, and physical health conditions.

- **AI and machine learning** can analyze these biomarkers with high accuracy, offering a non-invasive, scalable, and cost-effective method for early disease detection.

- Promising applications are emerging for **Parkinson’s disease, depression, Alzheimer’s**, and even cardiovascular and respiratory illnesses.

- Critical ethical challenges, including **data privacy, algorithmic bias, and accountability**, must be addressed through robust regulation and a “human-in-the-loop” approach.

- The future is not about AI replacing clinicians, but **augmenting their capabilities**, leading to a more proactive and personalized healthcare system.

The fusion of **AI chatbots, voice biomarkers, and healthcare** is poised to be one of the most transformative developments in digital medicine. It promises a future where early disease detection is not a luxury afforded by expensive tests, but an accessible, continuous part of our daily lives. The technology is powerful, the science is promising, and the potential to save lives and improve outcomes is immense.

However, this promise is inextricably linked to critical responsibilities. As a society, we must move forward with intention, proactively addressing the ethical challenges of privacy, bias, and security to ensure this technology is deployed equitably and safely. The goal is not to create an automated healthcare system devoid of human touch, but to augment the capabilities of our skilled clinicians.

The ultimate vision is a healthcare system where your voice becomes a vital, continuous part of your health record, empowering you and your care team with the insights needed to act sooner, intervene more effectively, and live healthier lives.

This technology raises as many questions as it answers. What are your biggest hopes or concerns about AI listening to your voice for health signs? Share your thoughts in the comments below!